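Run `\winutils.exe chmod 777 E:\tmp\hive` (using the full path to your copy of winutils.exe) to make the Hive scratch directory writable. Then save the log4j template in `E:\spark\conf\` as `E:\spark\conf\log4j.properties`.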
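The permission step can be scripted. This is a minimal sketch, assuming Python 3; the helper names `build_chmod_command` and `run_chmod` are hypothetical (not part of Spark or Hadoop), and the winutils.exe path in the usage comment is a placeholder you must replace with your own.

```python
import subprocess
from typing import List


def build_chmod_command(winutils_path: str, mode: str, target: str) -> List[str]:
    """Return the argv for `winutils.exe chmod <mode> <target>`."""
    # Passing a list (not one string) avoids shell quoting problems with
    # backslashes and spaces in Windows paths.
    return [winutils_path, "chmod", mode, target]


def run_chmod(winutils_path: str, mode: str, target: str) -> None:
    """Run winutils.exe chmod; raises CalledProcessError on a non-zero exit code."""
    subprocess.run(build_chmod_command(winutils_path, mode, target), check=True)


# Placeholder path (hypothetical): point it at wherever you saved winutils.exe.
# run_chmod(r"C:\path\to\winutils.exe", "777", r"E:\tmp\hive")
```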

# Installing Spark and winutils.exe #

Note that Spark 2.x is pre-built with Scala 2.11, except version 2.4.2. Download Spark and verify the release against the project release KEYS.

The PyPI package `winutils` (installed with `pip install winutils`) appears to be a separate Python library for Windows system functions: its example, `import WinUtils as wu` followed by `wu.Shutdown(wu.SHTDN_REASON_MINOR_OTHER)`, shuts the machine down. It is not the Hadoop `winutils.exe` that Spark needs.
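Apache release pages also link a `.sha512` checksum next to each Spark archive. A minimal sketch of comparing a downloaded archive against that digest is shown below, assuming you copy the hex digest out of the `.sha512` file by hand. This checksum comparison complements, but does not replace, verifying the GPG signature against the project KEYS; the function names are hypothetical.

```python
import hashlib
from pathlib import Path


def sha512_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Compute the SHA-512 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha512()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_digest(path: Path, published: str) -> bool:
    """Compare a file with a published SHA-512 digest.

    Whitespace is removed and case is ignored, because published digests
    are sometimes split into groups or written in upper case.
    """
    expected = "".join(published.split()).lower()
    return sha512_of_file(path) == expected


# Example (hypothetical file name and digest):
# matches_published_digest(Path("spark-2.3.1-bin-hadoop2.7.tgz"), "<digest from .sha512>")
```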

# How to fix the missing winutils.exe error #

When the download is complete, extract the archive. It should produce a folder named like `spark-2.3.1-bin-hadoop2.7`; put that folder anywhere you like. Without winutils.exe, Spark on Windows typically fails with:

`java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.`

The `null` in that path means Hadoop could not determine its home directory: neither the `hadoop.home.dir` system property nor the `HADOOP_HOME` environment variable was set.
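The lookup behind that message can be approximated as follows. This is a simplified sketch of the behaviour described above, not Hadoop's actual code: the `hadoop.home.dir` property (passed in here as `hadoop_home_dir`) wins over `HADOOP_HOME`, and an unset home turns into the literal text `null`. The helper names are hypothetical.

```python
import ntpath
import os
from typing import Mapping, Optional


def expected_winutils_path(env: Mapping[str, str],
                           hadoop_home_dir: Optional[str] = None) -> str:
    """Approximate where Hadoop looks for winutils.exe on Windows."""
    # The system property takes precedence over the environment variable.
    home = hadoop_home_dir or env.get("HADOOP_HOME")
    # ntpath always joins with backslashes, even when run on Linux/macOS.
    return ntpath.join(home if home else "null", "bin", "winutils.exe")


def winutils_is_present(env: Mapping[str, str],
                        hadoop_home_dir: Optional[str] = None) -> bool:
    """True if a Hadoop home is configured and winutils.exe exists in its bin folder."""
    home = hadoop_home_dir or env.get("HADOOP_HOME")
    return bool(home) and os.path.isfile(expected_winutils_path(env, hadoop_home_dir))


# Example: winutils_is_present(os.environ)
```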

# Mac OS X, Linux, and Windows #

It is highly recommended that you use Mac OS X or Linux for this course; these instructions are only for people who cannot. They were tested with Windows 10 64-bit.

Download Apache Spark 2.3+ and extract it into a local folder (for example, on `C:`). When `spark-shell` starts, its banner reports the JVM in use, for example `(Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_201)`.

Windows binaries of winutils are available on GitHub in `steveloughran/winutils`. The usage text of winutils.exe lists the available commands and their usages, including `chmod`, which changes file permissions.
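A small preflight check can confirm the setup these instructions assume (64-bit Windows and the Java version reported in the banner). This is a sketch with hypothetical helper names; which Java version a particular Spark release supports is an assumption you should confirm in that release's documentation.

```python
import platform
import re


def java_major_version(version: str) -> int:
    """Map a Java version string to its major version.

    Java 8 and earlier use the legacy "1.x" scheme ("1.8.0_201" -> 8);
    Java 9 and later start with the major number ("11.0.2" -> 11).
    """
    match = re.match(r"(\d+)(?:\.(\d+))?", version)
    if not match:
        raise ValueError(f"unrecognised Java version: {version!r}")
    first = int(match.group(1))
    if first == 1 and match.group(2) is not None:
        return int(match.group(2))
    return first


def is_64bit_windows() -> bool:
    """True on 64-bit Windows (platform.machine() is e.g. 'AMD64' or 'ARM64')."""
    return platform.system() == "Windows" and platform.machine().endswith("64")
```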

`hadoop\bin\winutils.exe` provides basic command-line utilities for Hadoop on Windows; running it prints a usage message.

The same "Failed to locate the winutils" error also appears when you run a self-contained local application with Spark (i.e., after adding `spark-assembly-x.x.x-hadoopx.x.x.jar` or the Maven dependency), not only in the shell. If you are installing Spark on Windows, use the winutils build that matches the Hadoop version Spark was built for.
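For a self-contained JVM application, one way to tell Hadoop where its binaries are is the `hadoop.home.dir` system property, passed with `-D` before the main class. A minimal sketch that builds such a `java` command line; the classpath and main class in the usage comment are hypothetical.

```python
from typing import List


def java_command(hadoop_home: str, classpath: str, main_class: str,
                 java: str = "java") -> List[str]:
    """Build a `java` argv that sets hadoop.home.dir for the application JVM."""
    return [
        java,
        # System properties must appear before the main class name.
        f"-Dhadoop.home.dir={hadoop_home}",
        "-cp", classpath,
        main_class,
    ]


# Hypothetical application:
# java_command(r"C:\Installations\Hadoop", r"app.jar;lib\*", "com.example.Main")
```

Inside Scala or Java code, `System.setProperty("hadoop.home.dir", ...)` before the SparkContext is created has a similar effect.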

# Downloading winutils.exe for Windows #

Step 2: Download a pre-built version of Spark (pre-built for Hadoop 2.7 and later), preferably Spark 2.0 or later.

Step 3: Download the 64-bit Windows build of winutils.exe for the version of Hadoop that your Spark installation was built against.

Alternatively, you can clone the entire repository (and thus all versions of winutils.exe) to your computer.
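Step 3 can be made mechanical by reading the Hadoop version from the Spark folder name. This sketch assumes the winutils repository stores binaries in folders named `hadoop-X.Y.Z` and picks the newest patch release in the matching line; both the folder layout and the "newest patch" rule are assumptions, and the function names are hypothetical.

```python
import re
from typing import Iterable, Optional, Tuple


def parse_spark_dist(name: str) -> Tuple[str, str]:
    """Split 'spark-2.3.1-bin-hadoop2.7' into ('2.3.1', '2.7')."""
    match = re.fullmatch(r"spark-([\d.]+)-bin-hadoop([\d.]+)", name)
    if not match:
        raise ValueError(f"not a pre-built Spark folder name: {name!r}")
    return match.group(1), match.group(2)


def _version_key(folder: str) -> Tuple[int, ...]:
    # 'hadoop-2.7.10' -> (2, 7, 10); non-numeric parts are ignored.
    return tuple(int(p) for p in folder[len("hadoop-"):].split(".") if p.isdigit())


def pick_winutils_dir(available: Iterable[str], hadoop_line: str) -> Optional[str]:
    """Return the newest 'hadoop-<line>.*' folder, or None if there is no match."""
    prefix = f"hadoop-{hadoop_line}."
    candidates = [d for d in available if d.startswith(prefix)]
    # Numeric comparison, so 2.7.10 ranks above 2.7.9.
    return max(candidates, key=_version_key, default=None)
```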

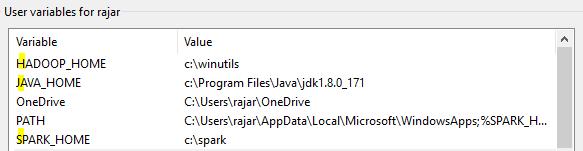

Right-click the downloaded Spark archive and extract it to `C:\Spark` using whatever tool you have on your system (e.g., 7-Zip). Your `C:\Spark` folder now has a new folder containing the Spark distribution.

Save winutils.exe anywhere you like, and remember where you put it. The GitHub repository `cdarlint/winutils` provides winutils.exe, hadoop.dll and hdfs.dll binaries for Hadoop on Windows.

On Windows you need to explicitly specify where to locate the Hadoop binaries, for example when setting up a standalone Spark (Scala) application. This was the critical point for me: I downloaded one version, and it did not work until I realized that there are 64-bit and 32-bit builds.

Now set the `HADOOP_HOME` environment variable to `C:\Installations\Hadoop`.
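A permanent `HADOOP_HOME` is normally set through the Windows System Properties dialog (or the `setx` command). For a single launch, the environment can instead be prepared in code and passed as `env=` to `subprocess.run`. A minimal sketch with a hypothetical helper name, which also puts `%HADOOP_HOME%\bin` at the front of `PATH`:

```python
import ntpath
import os
from typing import Dict, Mapping, Optional


def env_with_hadoop_home(hadoop_home: str,
                         base: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return a copy of the environment with HADOOP_HOME set and its bin on PATH."""
    env = dict(os.environ if base is None else base)
    env["HADOOP_HOME"] = hadoop_home
    bin_dir = ntpath.join(hadoop_home, "bin")
    # Windows separates PATH entries with ';'.
    existing = env.get("PATH", "")
    env["PATH"] = bin_dir + (";" + existing if existing else "")
    return env
```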

Download Hadoop 2.7's winutils.exe and place it in the directory `C:\Installations\Hadoop\bin`.
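Placing the file can be scripted as well. A minimal sketch, assuming the downloaded file is already on disk; the helper name and the download path in the usage comment are hypothetical.

```python
import shutil
from pathlib import Path


def install_winutils(downloaded: Path, hadoop_home: Path) -> Path:
    """Copy a downloaded winutils.exe into <hadoop_home>\\bin and return the new path."""
    bin_dir = hadoop_home / "bin"
    bin_dir.mkdir(parents=True, exist_ok=True)  # create bin if it does not exist yet
    target = bin_dir / "winutils.exe"
    shutil.copy2(downloaded, target)  # copy2 also preserves file timestamps
    return target


# Hypothetical download location:
# install_winutils(Path(r"C:\Users\me\Downloads\winutils.exe"), Path(r"C:\Installations\Hadoop"))
```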
